Q&A 6 How do you visualize model evaluation results from CSV?

6.1 Explanation

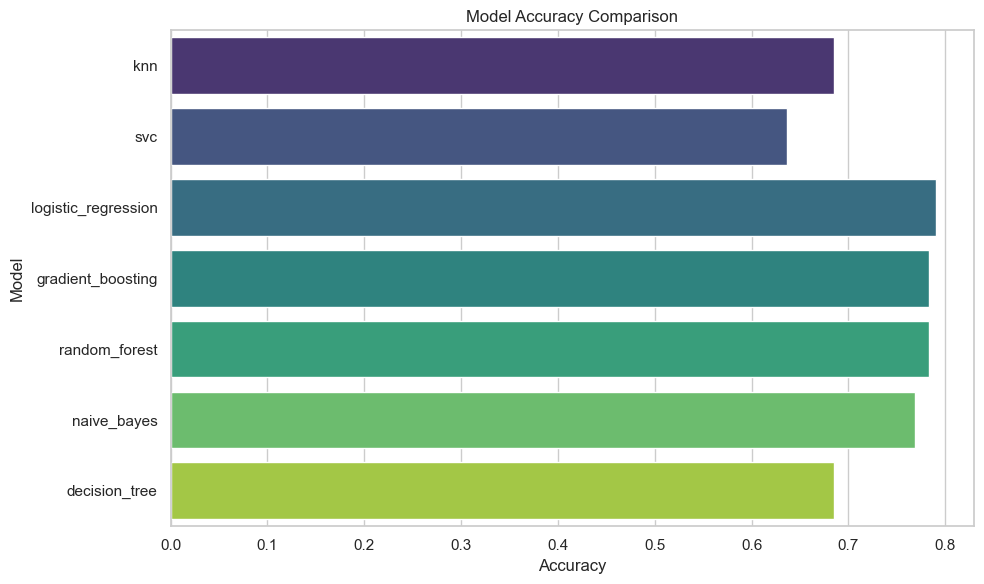

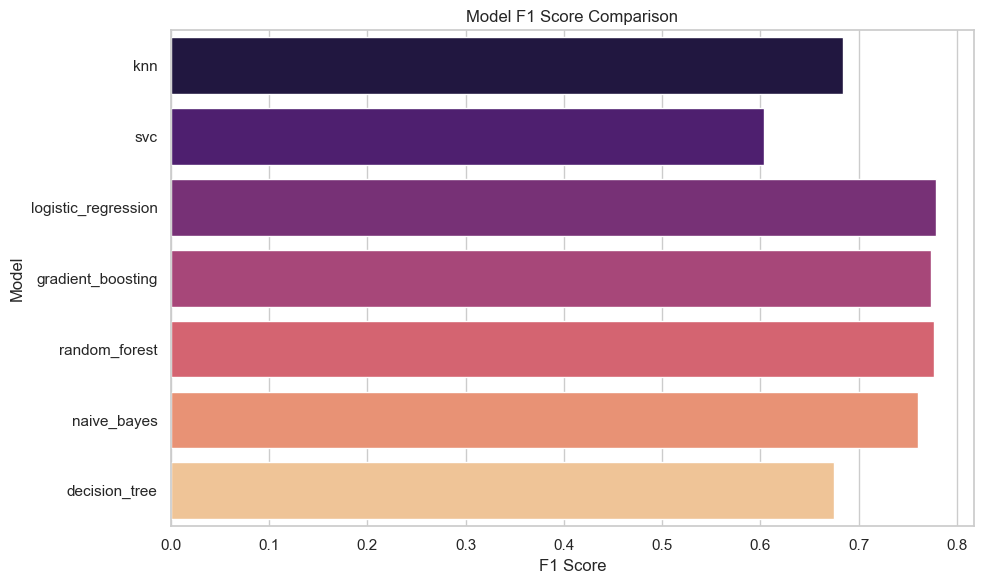

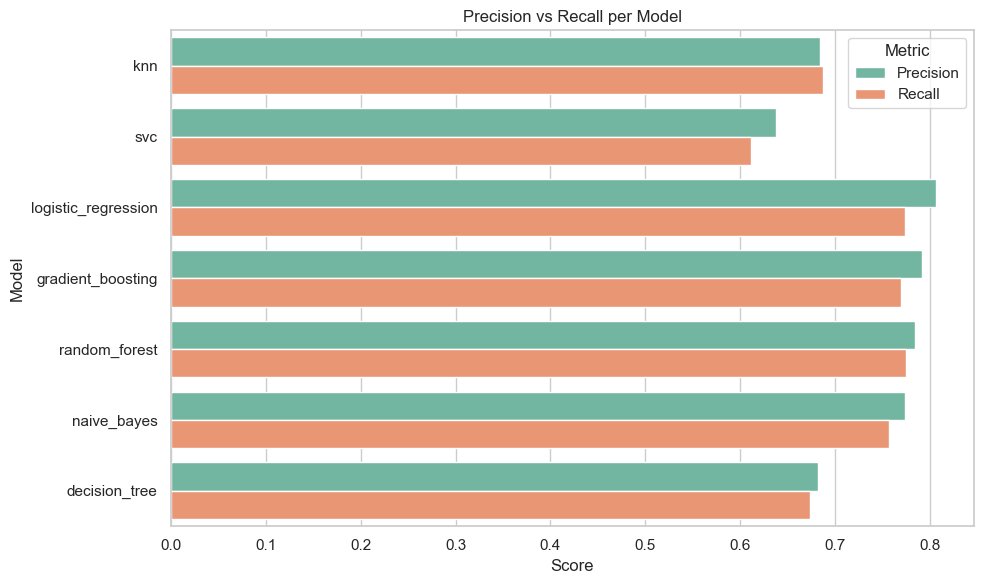

Once you’ve evaluated and stored model metrics (accuracy, precision, recall, F1) in a CSV file like evaluation_summary.csv, the next step is to visualize them for quick comparison.
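Before plotting, it can help to confirm that the CSV actually has the columns the plots rely on. The sketch below is one way to do that. The column names are assumed from the plotting code in this section, the helper name `load_evaluation_summary` is hypothetical, and the two rows are placeholders, not real evaluation results.

```python
import io

import pandas as pd

# Column names assumed from the plotting code in this section.
REQUIRED_COLUMNS = ["Model", "Accuracy", "Precision", "Recall", "F1 Score"]


def load_evaluation_summary(source):
    """Read the metrics CSV and fail early if an expected column is missing."""
    df = pd.read_csv(source)
    missing = [col for col in REQUIRED_COLUMNS if col not in df.columns]
    if missing:
        raise ValueError(f"Missing columns in evaluation summary: {missing}")
    return df


# Placeholder rows for illustration only, not real evaluation results.
example_csv = io.StringIO(
    "Model,Accuracy,Precision,Recall,F1 Score\n"
    "model_a,0.90,0.88,0.92,0.90\n"
    "model_b,0.85,0.91,0.78,0.84\n"
)

summary = load_evaluation_summary(example_csv)
print(summary.columns.tolist())
```

In practice you would pass the path "evaluation_summary.csv" instead of the in-memory example.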

Visualization helps:

- Identify the best-performing model
- Spot trade-offs (e.g., higher precision vs. lower recall)
- Communicate results to others
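The first two points can also be checked numerically alongside the plots. Here is a minimal sketch, assuming F1 Score is the ranking criterion (an assumption, since the right criterion depends on your use case) and using placeholder values rather than real results:

```python
import pandas as pd

# Placeholder values for illustration only, not real evaluation results.
df = pd.DataFrame({
    "Model": ["model_a", "model_b", "model_c"],
    "Precision": [0.88, 0.91, 0.80],
    "Recall": [0.92, 0.78, 0.81],
    "F1 Score": [0.90, 0.84, 0.805],
})

# Rank models from highest to lowest F1 Score.
ranked = df.sort_values("F1 Score", ascending=False).reset_index(drop=True)
best_model = ranked.loc[0, "Model"]

# A large absolute gap between precision and recall signals a trade-off.
ranked["Precision - Recall"] = ranked["Precision"] - ranked["Recall"]
widest_gap = ranked.loc[ranked["Precision - Recall"].abs().idxmax(), "Model"]

print("Best by F1 Score:", best_model)
print("Largest precision/recall gap:", widest_gap)
```

A positive gap means the model favors precision; a negative gap means it favors recall.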

We’ll use Python’s pandas, matplotlib, and seaborn to create performance bar plots.

6.2 Python Code

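import warnings

# Silence FutureWarning messages (for example, seaborn 0.13+ warns when palette is passed without hue)

warnings.simplefilter(action='ignore', category=FutureWarning)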

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

# Load evaluation summary

df = pd.read_csv("evaluation_summary.csv")

# Set style

sns.set(style="whitegrid")

plt.figure(figsize=(10, 6))

# Plot Accuracy

sns.barplot(x="Accuracy", y="Model", data=df, palette="viridis")

plt.title("Model Accuracy Comparison")

plt.tight_layout()

# plt.savefig("accuracy_plot.png")

plt.show()

# Plot F1 Score

plt.figure(figsize=(10, 6))

sns.barplot(x="F1 Score", y="Model", data=df, palette="magma")

plt.title("Model F1 Score Comparison")

plt.tight_layout()

# plt.savefig("f1_score_plot.png")

plt.show()

# Compare Precision vs Recall: reshape to long format so both metrics share one "Score" column

df_melted = df.melt(id_vars="Model", value_vars=["Precision", "Recall"],
                    var_name="Metric", value_name="Score")

plt.figure(figsize=(10, 6))

sns.barplot(x="Score", y="Model", hue="Metric", data=df_melted, palette="Set2")

plt.title("Precision vs Recall per Model")

plt.tight_layout()

# plt.savefig("precision_recall_plot.png")

plt.show()

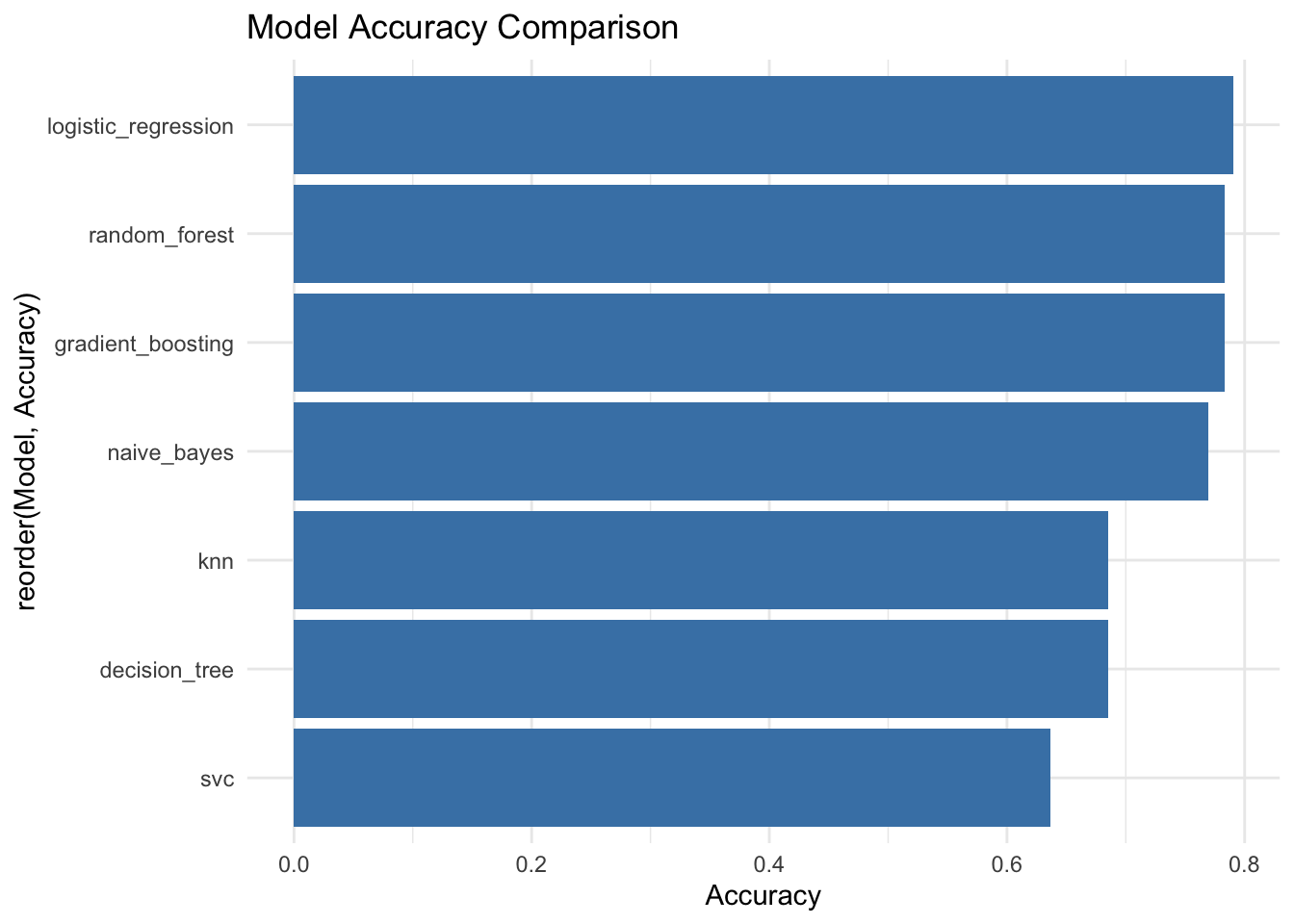
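If you would rather see every metric in one figure, the same melt-then-plot pattern used for Precision vs Recall extends to all four columns. The helper below is a sketch, not part of the original workflow: the name `plot_all_metrics` is hypothetical, the column names are assumed from the code above, and the demo values are placeholders.

```python
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt


def plot_all_metrics(df, metrics=("Accuracy", "Precision", "Recall", "F1 Score")):
    """Draw one grouped horizontal bar chart with a bar per metric and model."""
    # Reshape to long format: one row per (Model, Metric) pair.
    long_df = df.melt(id_vars="Model", value_vars=list(metrics),
                      var_name="Metric", value_name="Score")
    fig, ax = plt.subplots(figsize=(10, 6))
    sns.barplot(x="Score", y="Model", hue="Metric", data=long_df, ax=ax)
    ax.set_title("All Metrics per Model")
    fig.tight_layout()
    return ax


# Placeholder values for illustration only, not real evaluation results.
demo = pd.DataFrame({
    "Model": ["model_a", "model_b"],
    "Accuracy": [0.90, 0.85],
    "Precision": [0.88, 0.91],
    "Recall": [0.92, 0.78],
    "F1 Score": [0.90, 0.84],
})

ax = plot_all_metrics(demo)
# plt.show()
```

Returning the Axes instead of calling plt.show() inside the helper leaves it to the caller to display or save the figure.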

6.3 R Code

# For R visualization, use ggplot2:

df <- read.csv("evaluation_summary.csv")

library(ggplot2)

ggplot(df, aes(x = Accuracy, y = reorder(Model, Accuracy))) +
  geom_col(fill = "steelblue") +
  theme_minimal() +
  labs(title = "Model Accuracy Comparison")

✅ Takeaway: Visualizing model metrics makes it easier to select, explain, and justify your deployment choice. Graphs tell the story your numbers started.